Mistral AI, in collaboration with All Hands AI, unveiled Devstral, an open-source agentic LLM designed specifically for software engineering tasks. With a 46.8% score on the SWE-Bench Verified benchmark, Devstral was the top-performing open-source model on that benchmark at release, and it also outscored several proprietary competitors. This post covers Devstral’s capabilities, the collaboration with All Hands AI, its performance on SWE-Bench Verified, and its potential to reshape software development.

The Collaboration: Mistral AI and All Hands AI

Mistral AI, a Paris-based research lab, has established itself as a leader in building efficient and high-performing LLMs, such as the well-regarded Codestral, a 22-billion-parameter model optimized for code generation across over 80 programming languages. All Hands AI, the creator of the OpenHands framework (formerly OpenDevin), brings expertise in agentic coding scaffolds, which let AI models interact with codebases and execute complex, multi-step tasks. Together, the two organizations combined their strengths to create Devstral, a 24-billion-parameter model tailored for real-world software engineering challenges. The collaboration pairs Mistral’s optimization expertise with All Hands AI’s agentic framework to deliver a model that goes beyond traditional code generation. Devstral is designed to act as a full-fledged software engineering agent, capable of navigating large codebases, understanding project structures, and resolving intricate issues. Released under the permissive Apache 2.0 license, Devstral is freely available for both commercial and non-commercial use, making it accessible to developers, enterprises, and researchers worldwide.

What Makes Devstral Unique?

Unlike traditional LLMs that excel at atomic tasks like writing standalone functions or providing code completions, Devstral is purpose-built for agentic coding. This means it can handle complex, multi-step tasks that require contextual awareness across entire codebases. Here are some of Devstral’s standout features:

- Agentic Workflow Support: Devstral integrates seamlessly with agentic frameworks like OpenHands and SWE-Agent, enabling it to perform tasks such as exploring codebases, editing multiple files, and powering autonomous coding agents. These scaffolds provide a structured interface for the model to interact with test cases and execute development workflows.

- Large Context Window: Fine-tuned from Mistral Small 3.1, Devstral boasts a 128,000-token context window, allowing it to process and understand large codebases or extended conversations in a single pass. This is particularly valuable for projects with intricate dependencies or sprawling repositories.

- Lightweight and Efficient: With only 24 billion parameters, Devstral strikes a balance between power and efficiency. It can run on modest hardware, such as a single NVIDIA RTX 4090 GPU or a Mac with 32GB of RAM, making it ideal for local deployment and privacy-sensitive applications.

- Text-Only Design: To optimize for coding tasks, Devstral removes the vision encoder present in Mistral Small 3.1 and accepts text inputs only. It uses the Tekken tokenizer, whose vocabulary contains roughly 131,000 tokens (not words), which helps it encode code and text compactly.

- Open-Source Accessibility: Released under the Apache 2.0 license, Devstral is available for free on platforms like Hugging Face, Ollama, Kaggle, Unsloth, and LM Studio. It can also be accessed via Mistral’s API under the name devstral-small-2505 at a cost of $0.1 per million input tokens and $0.3 per million output tokens.

- Enterprise-Ready Customization: For organizations with specific needs, Mistral offers fine-tuning and customization options, allowing Devstral to be tailored to private codebases or specialized workflows.

These features make Devstral a versatile and powerful tool for developers, enabling everything from local experimentation to enterprise-grade deployments.
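To make the 128,000-token context window more concrete, here is a minimal sketch of a budget check that asks whether a set of source files would plausibly fit in a single prompt. The 4-characters-per-token ratio is a rough assumption for illustration, not a property of the Tekken tokenizer, and the helper names are hypothetical.

```python
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 128_000  # context size quoted in the feature list
CHARS_PER_TOKEN = 4              # assumption: crude heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate derived from the character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(texts: list[str], reserve_for_output: int = 4_000) -> bool:
    """Check whether the texts plus a reserved output budget fit in the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_for_output <= CONTEXT_WINDOW_TOKENS


def read_sources(root: str, suffix: str = ".py") -> list[str]:
    """Read every file under root with the given suffix (hypothetical helper)."""
    return [p.read_text(errors="ignore") for p in Path(root).rglob(f"*{suffix}")]
```

For an accurate count you would tokenize with the model's own tokenizer; the heuristic is only useful as a quick first filter, for example `fits_in_context(read_sources("path/to/repo"))`.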

Unmatched Performance on SWE-Bench Verified

The SWE-Bench Verified benchmark is a widely used standard for evaluating coding agents, consisting of 500 real-world GitHub issues manually screened for correctness. These issues test a model’s ability to navigate codebases, understand issue descriptions, and implement fixes that pass unit tests, mirroring the challenges developers face in practice. Devstral’s results on this benchmark stand out:

- 46.8% Score: Devstral achieved a 46.8% success rate on SWE-Bench Verified, outperforming all other open-source models by more than 6 percentage points.

- Outpacing Larger Models: Under the same test scaffold (OpenHands), Devstral surpassed significantly larger models like DeepSeek-V3-0324 (671B parameters) and Qwen3 235B-A22B.

- Beating Proprietary Models: Devstral outperformed closed-source models like GPT-4.1-mini (by over 20 percentage points) and Claude 3.5 Haiku, cementing its position as a leader in the coding agent space.
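Because the benchmark has a fixed size of 500 issues, the percentages above translate directly into issue counts. A small sketch of that arithmetic, using only the figures quoted in this section:

```python
TOTAL_ISSUES = 500    # size of SWE-Bench Verified, as stated above
SCORE_PERCENT = 46.8  # Devstral's reported score


def resolved_issues(score_percent: float, total: int = TOTAL_ISSUES) -> int:
    """Convert a benchmark percentage into a whole number of resolved issues."""
    return round(score_percent / 100 * total)


print(resolved_issues(SCORE_PERCENT))  # 234
print(resolved_issues(6))              # 30, the size of a 6-point lead in issues
```

In other words, 46.8% corresponds to 234 of the 500 issues, and a lead of 6 percentage points corresponds to 30 additional resolved issues.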

This performance highlights Devstral’s ability to handle real-world software engineering tasks with precision and efficiency. For example, in one demonstration, Devstral analyzed test coverage in the mistral-common repository, set up the testing environment, ran coverage tests, and generated visualizations like distribution charts and pie graphs — all autonomously. Another example showcased Devstral building a web-based game combining Space Invaders and Pong mechanics, demonstrating its ability to handle complex, multi-file tasks.

Devstral’s Evolution: Small 1.1 and Medium

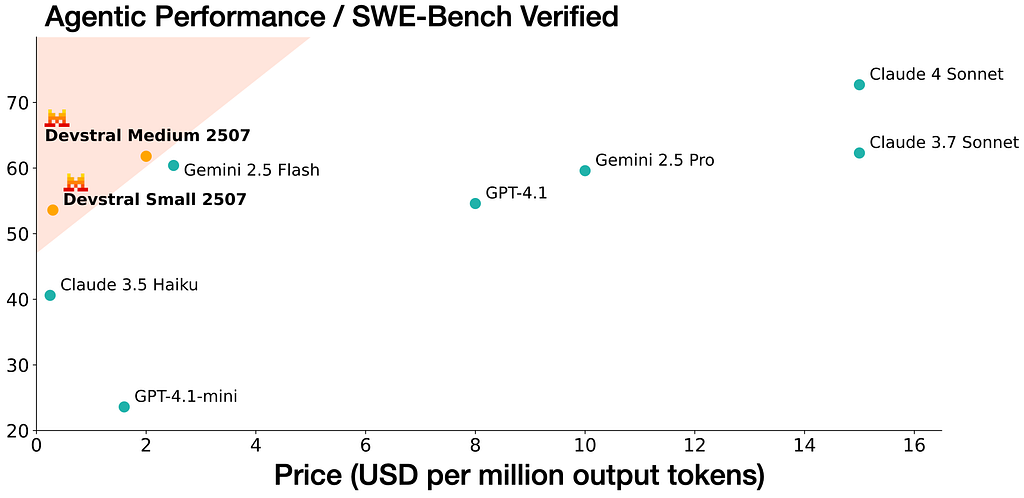

Since its initial release, Mistral and All Hands AI have continued to enhance Devstral. On July 10, 2025, they introduced Devstral Small 1.1 and Devstral Medium, further pushing the boundaries of agentic coding:

- Devstral Small 1.1: This updated version keeps the 24-billion-parameter architecture but achieves a 53.6% score on SWE-Bench Verified, an improvement of 6.8 percentage points over the original Devstral’s 46.8%. It remains open-source under the Apache 2.0 license and is optimized for local deployment.

- Devstral Medium: Available through Mistral’s API, this larger model scores an impressive 61.6% on SWE-Bench Verified, surpassing proprietary models like Gemini 2.5 Pro and GPT-4.1 at a fraction of the cost. It supports enterprise-grade customization and on-premise deployment for enhanced privacy.

These updates demonstrate Mistral’s commitment to continuous improvement and their focus on delivering cost-effective, high-performance solutions for developers and businesses.
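When comparing benchmark scores it helps to separate the absolute gain in percentage points from the relative gain. A minimal sketch using the two Devstral Small scores quoted in this post (46.8% and 53.6%):

```python
def gains(old: float, new: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent), rounded to 0.1."""
    points = round(new - old, 1)
    relative = round((new - old) / old * 100, 1)
    return points, relative


print(gains(46.8, 53.6))  # (6.8, 14.5)
```

So the move from the original Devstral to Small 1.1 is 6.8 percentage points, or roughly a 14.5% relative improvement.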

Practical Applications of Devstral

Devstral’s agentic capabilities make it a game-changer for a wide range of software engineering tasks. Here are some practical applications:

- Codebase Exploration: Devstral can analyze large repositories, identify dependencies, and understand project structures, making it ideal for onboarding new developers or auditing existing codebases.

- Multi-File Editing: It can perform iterative modifications across multiple files, such as updating package versions or refactoring code, with minimal human intervention.

- Bug Fixing and Feature Development: Trained on real GitHub issues, Devstral excels at resolving bugs and implementing new features that pass unit tests, streamlining development workflows.

- Integration with Development Tools: Through scaffolds like OpenHands, Devstral can execute code, run tests, and iterate on solutions within existing development environments, enhancing productivity.

- Privacy-Sensitive Deployments: Its lightweight design and open-source nature make it suitable for on-device use or deployment on private infrastructure, ensuring data security for sensitive codebases.

For example, developers can use Devstral to automate tasks like generating documentation, creating unit tests, or transforming code blocks based on natural language instructions. Enterprises can integrate Devstral into their IDEs via Mistral Code, a platform that combines Devstral with other Mistral models like Codestral for end-to-end AI-powered development.

How to Get Started with Devstral

Getting started with Devstral is straightforward, thanks to its accessibility and compatibility with various platforms. Here’s how developers can begin:

— Local Deployment:

- Download Devstral from platforms like Hugging Face, Ollama, Kaggle, Unsloth, or LM Studio.

- Use a Runpod instance with GPU support or a local machine with an NVIDIA RTX 4090 or Mac with 32GB RAM.

- Run Devstral with the vLLM library for production-ready inference pipelines or use the mistral-chat CLI for simple interactions.
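A quick back-of-the-envelope estimate shows why the hardware choice matters for local deployment. The sketch below counts memory for the weights only (no KV cache or activations), and the bytes-per-parameter table is an assumption covering common weight formats rather than anything specified by Mistral.

```python
PARAMS = 24e9  # parameter count quoted in this post

# Assumption: typical storage cost per parameter for common weight formats.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, dtype: str) -> float:
    """Approximate memory needed for the weights alone, in gigabytes."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(PARAMS, dtype):.0f} GB")
```

Under these assumptions the bf16 weights alone come to about 48 GB, which is more than the 24 GB of VRAM on an RTX 4090, so a single consumer GPU would most likely be running a quantized build.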

— API Access:

- Access Devstral via Mistral’s API under the name devstral-small-2505 or devstral-small-2507 (for Small 1.1).

- Pricing is $0.1 per million input tokens and $0.3 per million output tokens. Create a Mistral account and obtain an API key to get started.

— Integration with OpenHands:

- Use the OpenHands scaffold to enable agentic workflows. Set up a Docker container with the provided configuration and link it to a repository for tasks like test coverage analysis or feature implementation.

- Example command:

    export MISTRAL_API_KEY=<MY_KEY>

    docker run -it --rm --pull=always \
        -e SANDBOX_RUNTIME_CONTAINER_IMAGE=docker.all-hands.dev/all-hands-ai/runtime:0.39-nikolaik \
        -e LOG_ALL_EVENTS=true \
        -v /var/run/docker.sock:/var/run/docker.sock \
        -v ~/.openhands-state:/.openhands-state \
        -p 3000:3000 \
        --add-host host.docker.internal:host-gateway \
        --name openhands-app \
        docker.all-hands.dev/all-hands-ai/openhands:0.39

— Experiment in a Sandbox:

- Developers are encouraged to test Devstral in a controlled environment, such as a sandbox repository, to evaluate its suggestions. Perform code reviews on AI-generated contributions to ensure quality, just as you would with human developers.

Mistral also provides comprehensive documentation and tutorials on their website (mistral.ai) to guide developers through setup and usage.

The Future of Devstral and Agentic Coding

Devstral’s release marks a significant step toward autonomous software engineering, where AI acts not just as a tool but as an integrated team member. Mistral has already hinted at a larger agentic coding model in development, potentially exceeding 30 billion parameters, which could further close the gap with proprietary giants like OpenAI and Google.

The collaboration with All Hands AI emphasizes generalization across different prompts and scaffolds, ensuring Devstral remains versatile for various development workflows. The open-source community is also invited to provide feedback to refine the model, fostering a collaborative approach to advancing AI-powered coding.

As agentic coding evolves, we may see workflows where developers define high-level designs, and Devstral handles implementation, testing, and iteration. This shift mirrors past automation trends, like version control and continuous integration, which transformed software development. With tools like Devstral, continuous autonomous coding could become the next layer in the development pipeline, boosting productivity and reducing manual overhead.

Code Implementations

Local vLLM Inference

vllm serve mistralai/Devstral-Small-2505 --tokenizer_mode mistral --config_format mistral --load_format mistral --tool-call-parser mistral --enable-auto-tool-choice --tensor-parallel-size 2
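Once the server is running, it can be queried through vLLM's OpenAI-compatible chat endpoint. The sketch below assumes the default address `http://localhost:8000` and shows how a tool definition might be passed, since the serve command enables automatic tool choice. The `run_tests` tool is purely hypothetical and exists only to illustrate the request shape.

```python
import json
import urllib.request

VLLM_URL = "http://localhost:8000/v1/chat/completions"  # assumption: default host and port
MODEL = "mistralai/Devstral-Small-2505"                  # same name passed to `vllm serve`

# Hypothetical tool, defined in the OpenAI-style function schema.
RUN_TESTS_TOOL = {
    "type": "function",
    "function": {
        "name": "run_tests",
        "description": "Run the project's test suite and return the output.",
        "parameters": {
            "type": "object",
            "properties": {"path": {"type": "string", "description": "Test directory"}},
            "required": ["path"],
        },
    },
}


def build_payload(user_message: str) -> dict:
    """Assemble a chat request that lets the model decide whether to call the tool."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [RUN_TESTS_TOOL],
        "tool_choice": "auto",
    }


def chat(payload: dict, url: str = VLLM_URL) -> dict:
    """POST the payload to the local server (requires the server to be running)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def extract_tool_calls(response: dict) -> list[tuple[str, dict]]:
    """Pull (tool name, parsed arguments) pairs out of a chat completion response."""
    message = response["choices"][0]["message"]
    return [
        (call["function"]["name"], json.loads(call["function"]["arguments"]))
        for call in message.get("tool_calls") or []
    ]
```

A typical round trip would be `extract_tool_calls(chat(build_payload("Run the tests in ./tests")))`. Scaffolds such as OpenHands handle this loop for you; the sketch only shows what travels over the wire.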

Mistral Inference

pip install mistral_inference --upgrade

    from huggingface_hub import snapshot_download
    from pathlib import Path

    # Store the weights under ~/mistral_models/Devstral
    mistral_models_path = Path.home().joinpath('mistral_models', 'Devstral')
    mistral_models_path.mkdir(parents=True, exist_ok=True)

    # Download only the files mistral_inference needs
    snapshot_download(
        repo_id="mistralai/Devstral-Small-2505",
        allow_patterns=["params.json", "consolidated.safetensors", "tekken.json"],
        local_dir=mistral_models_path,
    )

Hugging Face Inference

pip install mistral-common --upgrade

    import torch

    from mistral_common.protocol.instruct.messages import (
        SystemMessage, UserMessage
    )
    from mistral_common.protocol.instruct.request import ChatCompletionRequest
    from mistral_common.tokens.tokenizers.mistral import MistralTokenizer
    from huggingface_hub import hf_hub_download
    from transformers import AutoModelForCausalLM


    def load_system_prompt(repo_id: str, filename: str) -> str:
        # Fetch the system prompt file from the model repository and read it
        file_path = hf_hub_download(repo_id=repo_id, filename=filename)
        with open(file_path, "r") as file:
            system_prompt = file.read()
        return system_prompt


    model_id = "mistralai/Devstral-Small-2505"
    tekken_file = hf_hub_download(repo_id=model_id, filename="tekken.json")
    SYSTEM_PROMPT = load_system_prompt(model_id, "SYSTEM_PROMPT.txt")

    tokenizer = MistralTokenizer.from_file(tekken_file)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the conversation with the Mistral chat format
    tokenized = tokenizer.encode_chat_completion(
        ChatCompletionRequest(
            messages=[
                SystemMessage(content=SYSTEM_PROMPT),
                UserMessage(content="<your-command>"),
            ],
        )
    )

    output = model.generate(
        input_ids=torch.tensor([tokenized.tokens]),
        max_new_tokens=1000,
    )[0]

    # Decode only the newly generated tokens, skipping the prompt
    decoded_output = tokenizer.decode(output[len(tokenized.tokens):])
    print(decoded_output)

Mistral AI’s Devstral: Revolutionizing Software Engineering with an Open-Source Agentic LLM was originally published in Data Science in Your Pocket on Medium.