LangChain’s Open SWE is an open-source, cloud-native, asynchronous coding agent designed to act like a real teammate: it plans, writes, tests, reviews code, and opens pull requests directly from GitHub issues or a web UI. Built on LangGraph and deployed on LangGraph Platform, it emphasizes long-running workflows, stateful execution, and human-in-the-loop control for complex engineering tasks rather than quick IDE autocompletions. Each task runs inside an isolated Daytona sandbox so the agent can safely execute shell commands, run tests, and install dependencies without touching a developer’s machine. This article explains how Open SWE works, what makes it different, how to use it, and how to adapt it to a team’s stack.

Why Open SWE Matters

Open SWE reflects a broader shift from short, synchronous copilots to autonomous agents that operate in the background, integrate with existing tools, and deliver completed work as PRs. By working against the source of truth (GitHub) and maintaining status in issues, it mirrors standard engineering rituals and fits into trunk-based development, CI, and code review flows. The architecture aims to reduce broken builds through a built-in Reviewer that checks and iterates before opening a PR, cutting down on noisy cycles and wasted reviewer time. Because sessions are cloud-hosted and asynchronous, teams can queue multiple tasks and return later to reviewed changes rather than babysitting an LLM in an IDE.

Core Capabilities

- GitHub-native workflow: Trigger via labels on issues (e.g., open-swe-auto), stream updates to the issue, and receive a linked PR at completion.

- Long-running, multi-step execution: Research codebases, generate plans, implement changes, run tests/linters, and revise code based on failures before PR creation.

- Safe, isolated runtime: Each task runs in a fresh, VM-like Daytona sandbox with full shell access, enabling package installs and end-to-end test runs without local risk.

- Human-in-the-loop: Approve or edit plans before execution, interrupt or “double-text” during runs, and provide iterative guidance without restarting the agent.

- Open and extensible: Fork the repo, add tools, customize prompts, and wire in internal APIs to adapt the agent to proprietary stacks.
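
To make the label-driven trigger concrete, here is a minimal sketch of how a webhook receiver might decide whether a GitHub `issues` event should start a run. It is not Open SWE's actual handler: `RunRequest` and `run_request_from_event` are hypothetical names, and treating `open-swe-auto` as "approve the plan automatically" while `open-swe` waits for a human is an assumption made for illustration. The only parts taken from GitHub itself are the `action`, `label.name`, and `issue.number` fields of the webhook payload.

```python
from dataclasses import dataclass
from typing import Optional

# Label names come from the article; the meaning attached to each one
# below is an assumption made for this sketch.
MANUAL_LABEL = "open-swe"
AUTO_LABEL = "open-swe-auto"


@dataclass
class RunRequest:
    """Hypothetical record describing a run the agent should start."""
    issue_number: int
    auto_approve_plan: bool


def run_request_from_event(event: dict) -> Optional[RunRequest]:
    """Return a RunRequest if a GitHub `issues` webhook event should start a run."""
    # Only react when a label was just added to the issue.
    if event.get("action") != "labeled":
        return None
    label = event.get("label", {}).get("name")
    if label not in (MANUAL_LABEL, AUTO_LABEL):
        return None
    return RunRequest(
        issue_number=event["issue"]["number"],
        auto_approve_plan=(label == AUTO_LABEL),
    )


if __name__ == "__main__":
    event = {"action": "labeled", "label": {"name": "open-swe"}, "issue": {"number": 12}}
    print(run_request_from_event(event))
```

Keeping the decision in a pure function like this makes the trigger rules easy to unit test before any agent run is paid for.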

Architecture at a Glance

Open SWE uses a multi-agent workflow implemented in LangGraph with clearly defined roles and handoffs.

- Manager: Entry point that orchestrates state, routes tasks, and coordinates agent transitions across the workflow.

- Planner: Analyzes the repository and issue, searches files, and proposes a step-by-step execution plan for human review or automatic approval.

- Programmer: Executes the approved plan inside the sandbox, writes code, runs tests, and uses external docs/tools as needed to complete tasks.

- Reviewer: Validates outputs, runs checks, and sends issues back to the Programmer for fixes until the patch is ready, then opens a PR.

This pipeline trades raw speed for reliability: forcing a planning phase and a review phase avoids “code first, fix later” patterns that often break CI and cause drift in large repos.
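
The handoffs described above can be sketched in a few lines of plain Python. This is an illustration of the control flow only, written without LangGraph so it runs with no dependencies. `TaskState`, `manager`, the `checks` callback, and the `max_rounds` cap are all hypothetical, and the "patch" is just a string standing in for real file edits.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TaskState:
    """Hypothetical shared state passed between the roles."""
    issue: str
    plan: List[str] = field(default_factory=list)
    patch: str = ""
    review_notes: List[str] = field(default_factory=list)
    pr_opened: bool = False
    log: List[str] = field(default_factory=list)


def planner(state: TaskState) -> None:
    # A real planner would search the repository; here the plan is canned.
    state.plan = [f"inspect code related to: {state.issue}", "apply change", "run tests"]
    state.log.append("planner")


def programmer(state: TaskState) -> None:
    # Each pass appends a new revision marker; a real agent would edit files.
    revision = state.patch.count("rev") + 1
    state.patch += f"rev{revision};"
    state.review_notes.clear()
    state.log.append("programmer")


def reviewer(state: TaskState, checks: Callable[[str], List[str]]) -> None:
    # `checks` returns a list of problems; an empty list means the patch passes.
    state.review_notes = checks(state.patch)
    state.log.append("reviewer")


def manager(issue: str, checks: Callable[[str], List[str]], max_rounds: int = 3) -> TaskState:
    """Plan once, then loop Programmer -> Reviewer until checks pass or rounds run out."""
    state = TaskState(issue=issue)
    planner(state)
    for _ in range(max_rounds):
        programmer(state)
        reviewer(state, checks)
        if not state.review_notes:
            state.pr_opened = True  # stands in for opening the pull request
            break
    return state


if __name__ == "__main__":
    # Pretend the tests only pass on the second revision.
    result = manager("fix flaky date parser", lambda p: [] if "rev2" in p else ["tests failing"])
    print(result.log, result.pr_opened)
```

The point of the sketch is the gate: nothing is marked as a PR until the Reviewer step returns no notes, and a round cap keeps a stubborn failure from looping forever.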

How It Runs: Cloud, Persistence, and Isolation

Open SWE is designed for sessions that can last tens of minutes to hours, requiring durable state, repeatable environments, and unrestricted command execution within a safe boundary. LangGraph Platform provides the long-running orchestration and persistence, while Daytona provides per-task sandboxes akin to disposable VMs with file system, network, and shell access. This design allows the agent to fetch dependencies, run unit/integration tests, and iterate based on failures with no local machine setup, making it suitable for parallelizing multiple tasks or offloading heavy CI-like work.
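
Why durable state matters for hour-long sessions is easier to see in miniature. The sketch below is not how LangGraph Platform persists runs; it is a toy, assuming a JSON file as the checkpoint store and a fixed list of hypothetical step names, that shows how saving state after every step lets an interrupted run resume instead of starting over.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional

# Hypothetical step names for illustration only.
STEPS = ["plan", "implement", "test", "review"]


def load_checkpoint(path: Path) -> dict:
    """Load saved state, or start fresh if no checkpoint exists yet."""
    if path.exists():
        return json.loads(path.read_text())
    return {"completed": []}


def run_until(path: Path, stop_after: Optional[int] = None) -> dict:
    """Run the remaining steps, saving after each one.

    `stop_after` simulates an interruption after that many steps.
    """
    state = load_checkpoint(path)
    executed = 0
    for step in STEPS:
        if step in state["completed"]:
            continue  # already done in an earlier session
        if stop_after is not None and executed >= stop_after:
            break
        state["completed"].append(step)
        path.write_text(json.dumps(state))  # persist before moving on
        executed += 1
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        checkpoint = Path(tmp) / "task.json"
        print(run_until(checkpoint, stop_after=2))  # interrupted after two steps
        print(run_until(checkpoint))                # resumes with the remaining steps
```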

Getting Started

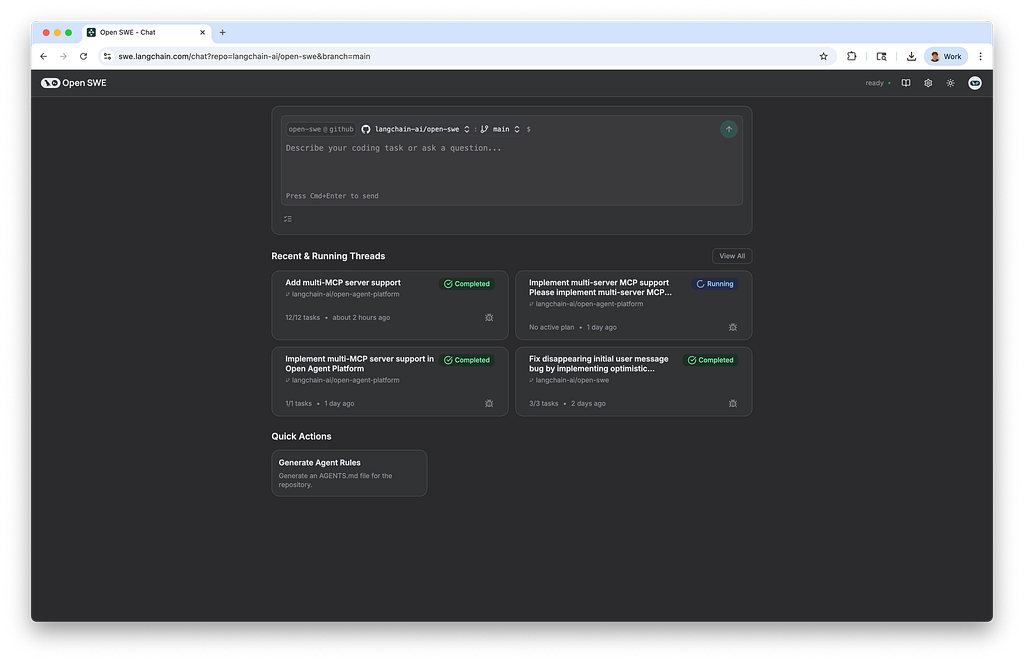

- Hosted option: Teams can try the hosted UI at swe.langchain.com by connecting GitHub and supplying a model API key (e.g., Anthropic), then creating tasks or labeling issues to kick off runs.

- Self-hosting: The codebase is fully open source on GitHub, and LangChain notes enterprises can self-host via their own LangGraph API server or with the LangGraph Platform’s self-hosting offering.

- Documentation: The docs provide step-by-step usage for both web and GitHub-triggered workflows, including webhook setup, label conventions, and status updates inside issues.

Typical Workflow

- Create a task from an issue or the web UI: The Manager initializes state and passes control to the Planner.

- Planner drafts an execution plan: It examines relevant files, performs searches, and proposes steps for approval or auto-approval.

- Programmer implements in sandbox: It writes code, runs tests/linters, and searches external docs as needed, iterating on errors.

- Reviewer verifies and requests fixes: If checks fail, it loops back to Programmer until quality gates pass.

- PR creation and linking: The system opens a pull request and links back to the tracking issue with logs and artifacts.

This mirrors how a human engineer would work: understand the codebase, propose a plan, implement, test, and request review via PRs.

What It’s Good At (and Not)

Strengths: It shines on multi-file changes, refactors, test creation and repair, dependency updates, docs generation, and feature scaffolding where planning and iterative test runs provide leverage. It’s designed for long-running tasks where asynchronous execution and persistent state reduce friction compared with a chat-based assistant inside the IDE.

Limitations: For trivial single-line fixes or tiny formatting changes, a heavy planning and review cycle may introduce overhead; LangChain notes a streamlined local/CLI mode is being developed for lightweight tasks. Performance and reliability will depend on the choice of underlying LLM, repository complexity, and the quality of CI/test suites to surface regressions during the review loop.

Comparisons and Ecosystem Context

Open SWE joins a growing family of autonomous coding agents, such as SWE-agent, that pursue end-to-end fixes from GitHub issues via tool-augmented LLMs and benchmark on SWE-bench. LangChain emphasizes direct GitHub integration, long-running LangGraph orchestration, Reviewer-gated PRs, and Daytona sandboxes as differentiators oriented toward production use rather than research-only runs. Community discussion and press coverage note that Open SWE can be self-hosted, is extensible, and is used internally by LangChain. Some observers remain skeptical and stress the importance of rigorous evaluation on benchmarks and real-world repos.

Security and Operations

Security posture centers on disposable, isolated sandboxes per task, reducing risk from arbitrary shell execution and third-party dependencies compared with running agents locally. GitHub OAuth scopes should be minimally permissive, and organizations should enforce branch protections and required checks to ensure that only validated PRs are merged. LangSmith can be used alongside Open SWE for tracing and evaluation to audit agent behavior, improve prompts, and measure success rates across workflows.
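
As one way to enforce "only validated PRs are merged", branch protection can be set through GitHub's REST API (`PUT /repos/{owner}/{repo}/branches/{branch}/protection`). The sketch below only builds the request and does not send it. The owner, repository, and check names in the usage example are placeholders, and the specific policy (strict status checks, one approving review, admins included) is an assumption that should be adapted to each team's rules.

```python
import json
from typing import Iterable, Tuple


def branch_protection_request(
    owner: str,
    repo: str,
    branch: str,
    required_checks: Iterable[str],
    approvals: int = 1,
) -> Tuple[str, str, str]:
    """Build (method, url, json_body) for GitHub's "update branch protection" endpoint."""
    url = f"https://api.github.com/repos/{owner}/{repo}/branches/{branch}/protection"
    payload = {
        # Require the listed status checks and an up-to-date branch before merging.
        # `contexts` is the older field name; newer API versions also offer `checks`.
        "required_status_checks": {"strict": True, "contexts": list(required_checks)},
        # Apply the rules to administrators as well.
        "enforce_admins": True,
        # Require human approval on every PR, including agent-authored ones.
        "required_pull_request_reviews": {"required_approving_review_count": approvals},
        # No per-user push restrictions in this sketch.
        "restrictions": None,
    }
    return "PUT", url, json.dumps(payload)


if __name__ == "__main__":
    method, url, body = branch_protection_request("acme", "widgets", "main", ["ci/test"])
    print(method, url)
    print(body)
```

Sending the request requires a token with administrative rights on the repository, which is a good reason to run this from a controlled setup script rather than from the agent itself.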

Extending and Customizing

Because it’s open source and built on LangGraph, teams can add new tools (e.g., internal APIs, custom linters), adjust the multi-agent logic, or tune prompts to match house style, test conventions, and architectural guidelines. The docs cover usage from GitHub and the web UI, and the repository’s issues and PRs show how contributions and bug fixes are handled and suggest the project is under active development. Daytona’s execution layer can be swapped or configured for different isolation policies if an organization has preferred sandbox or container solutions with comparable guarantees.

Practical Tips for Adoption

- Start with non-critical repos or low-risk issues to calibrate prompts, plan-review thresholds, and CI timeouts before expanding to core services.

- Prefer issues with clear acceptance criteria and good test coverage so the Reviewer loop provides strong signals for correctness.

- Use labels and templates to encode expectations for planning depth, coding standards, and required checks, so the agent’s plan can align with the team’s norms.

- Integrate observability with LangSmith to trace failures, prompt drift, and tool errors, then iterate on agent behaviors and prompts.

- Keep humans in the loop: enforce plan approvals on unfamiliar codebases and require PR reviews like any other teammate for safety and knowledge sharing.

How to Try It Today

- Hosted trial: Connect GitHub and an Anthropic key at the Open SWE UI to delegate tasks and observe the end-to-end flow.

- Self-host: Clone the repository and follow docs for local or platform deployment, configuring GitHub webhooks and sandbox credentials per environment.

- GitHub-only triggers: Add open-swe or open-swe-auto labels to issues to let the agent pick them up and report status and PRs back in situ.
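
For the label-based trigger, the label can be applied by hand in the GitHub UI or through GitHub's REST API (`POST /repos/{owner}/{repo}/issues/{issue_number}/labels`). The following is a minimal sketch using only the standard library. `build_label_request` is a hypothetical helper, the owner, repository, issue number, and token are placeholders, and the request is built but not sent.

```python
import json
import urllib.request


def build_label_request(
    owner: str,
    repo: str,
    issue_number: int,
    token: str,
    label: str = "open-swe",
) -> urllib.request.Request:
    """Build a request that adds `label` to an issue via the GitHub REST API."""
    url = f"https://api.github.com/repos/{owner}/{repo}/issues/{issue_number}/labels"
    body = json.dumps({"labels": [label]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_label_request("acme", "widgets", 42, token="<your-token>", label="open-swe-auto")
    print(req.get_method(), req.full_url)
    # To actually apply the label, pass the request to urllib.request.urlopen(req).
```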

The Bottom Line

Open SWE operationalizes a production mindset for coding agents: long-running plans, stateful execution, explicit review stages, and GitHub-native delivery in PRs rather than ephemeral chat snippets. By pairing LangGraph orchestration with Daytona isolation, it can safely perform the end-to-end work a teammate would do (read, plan, code, test, fix, and submit) while remaining open source and extensible for real-world engineering teams. For organizations seeking to move beyond autocomplete toward durable automation, Open SWE offers a credible foundation that aligns with standard software workflows and can be tailored to enterprise constraints and practices.

LangChain Open SWE: In‑Depth Guide to the Open-Source Asynchronous Coding Agent was originally published in Data Science in Your Pocket on Medium.