KAT-Dev-32B Unpacked: A 32B Open Coding Model Trained with Mid-Train, RFT, and Scaled Agentic RL

KAT-Dev-32B is a 32B-parameter open-weight model for software engineering. It combines mid-training, supervised fine-tuning (SFT), reinforcement fine-tuning (RFT), and a large-scale agentic RL stage to achieve competitive results on real-world code-editing tasks. On SWE-Bench Verified it resolves 62.4% of issues, which ranks it among the top open-source models by resolve rate while remaining runnable locally on suitably equipped hardware.

Why it matters

KAT-Dev-32B adopts a pragmatic recipe: strengthen core agent abilities during mid-training, curate diverse SFT tasks across programming scenarios, guide the policy with “teacher trajectories” in RFT, then scale agentic RL on infrastructure that makes long-horizon trajectories tractable. The result is a strong issue-resolution rate on the SWE-Bench Verified repositories without relying on proprietary models or closed tool stacks.

Training pipeline

- Mid-training: Built on a Qwen3-32B base, this stage strengthens foundational abilities such as instruction following, tool use, and multi-turn interaction, setting the stage for subsequent tuning and RL.

- SFT coverage: Eight task types spanning eight programming scenarios, intended to broaden generalization beyond narrow benchmark tuning.

- Reinforcement fine-tuning (RFT): Policy shaping guided by teacher trajectories, applied before full RL to stabilize learning and improve sample efficiency on code-editing tasks.

- Agentic RL scaling: Introduces multi-level prefix caching for log-prob reuse, entropy-based trajectory pruning, and a SeamlessFlow-style architecture that decouples agents from the trainer while exploiting heterogeneous compute at scale.
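The public material does not spell out how the multi-level prefix cache works, so the following is only a toy illustration of the underlying idea, under our own assumptions. Rollouts that share a prompt (and often their first turns) share a token prefix, and the log-probs for an identical prefix only need computing once. The hypothetical helper `count_prefix_savings` inserts token sequences into a trie and counts how many token positions would actually need a forward pass; the token IDs are made up.

```python
from typing import Dict, List, Tuple


def count_prefix_savings(trajectories: List[List[int]]) -> Tuple[int, int]:
    """Return (total_tokens, unique_tokens) for a batch of token sequences.

    unique_tokens is the number of trie nodes: token positions whose log-probs
    must actually be computed when identical prefixes are cached and reused.
    """
    root: Dict[int, dict] = {}
    total = 0
    unique = 0
    for seq in trajectories:
        node = root
        for tok in seq:
            total += 1
            if tok not in node:
                # New branch: this prefix has not been scored before
                node[tok] = {}
                unique += 1
            node = node[tok]
    return total, unique


# Three hypothetical rollouts sharing a 4-token prompt; two also share a first turn.
rollouts = [
    [1, 2, 3, 4, 10, 11, 20],
    [1, 2, 3, 4, 10, 11, 21, 22],
    [1, 2, 3, 4, 30],
]
total, unique = count_prefix_savings(rollouts)
print(f"total={total} unique={unique} saved={1 - unique / total:.0%}")
```

Here 20 token positions collapse to 10 unique prefixes, so half the log-prob computation is redundant. Long agentic trajectories that branch late share far longer prefixes, which is why caching at this level can pay off.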

Architecture and implementation notes

- Base backbone: The Qwen3-32B dense architecture provides modern transformer components and strong language priors for code understanding.

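- Dense vs MoE: KAT-Dev-32B is dense, so every token passes through all 32B parameters. Per-token compute is therefore higher, and throughput per GPU typically lower, than for an MoE model of similar total size that activates only a fraction of its weights. In exchange, it avoids MoE routing complexity and remains straightforward to fine-tune.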

- Agent loop readiness: The model is tuned to work in multi-step edit-execute cycles typical of SWE-Bench-style environments, aligning with agent frameworks that call tools, run tests, and iterate.
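The dense-vs-MoE trade-off comes down to how many parameters each token touches. A common rule of thumb puts a forward pass at about 2 FLOPs per active parameter per token (ignoring attention over the context). The sketch below applies it to the dense 32B model and to an illustrative MoE with 30B total and 3B active parameters. The MoE figures are an example chosen for comparison, not a specific competitor from the announcements.

```python
def forward_gflops_per_token(active_params: float) -> float:
    """Rule of thumb: ~2 FLOPs per active parameter per generated token."""
    return 2 * active_params / 1e9


dense_32b = forward_gflops_per_token(32e9)   # dense: every token uses all 32B weights
moe_30b_a3b = forward_gflops_per_token(3e9)  # illustrative MoE: 30B total, 3B active

print(f"dense 32B: {dense_32b:.0f} GFLOPs/token")
print(f"MoE 30B (3B active): {moe_30b_a3b:.0f} GFLOPs/token")
print(f"ratio: {dense_32b / moe_30b_a3b:.1f}x")
```

The MoE still has to keep all 30B parameters in memory, so the saving is in compute per token, not in VRAM.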

Benchmarks

- SWE-Bench Verified: 62.4% resolved, placing 5th among open-source models of all sizes according to public summaries and announcements. This puts KAT-Dev-32B close to larger or closed alternatives while remaining open-weight.

- Positioning vs peers: Announcements compare KAT-Dev-32B with proprietary and open coding models; a related variant, KAT-Coder, reports 73.4% on SWE-Bench Verified but was not released as open weights.
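SWE-Bench Verified consists of 500 human-validated task instances, so resolve rates translate directly into issue counts. The small conversion below (the helper name is ours) shows that each percentage point is 5 issues.

```python
VERIFIED_INSTANCES = 500  # size of the SWE-Bench Verified split


def resolved_count(rate_percent: float, n: int = VERIFIED_INSTANCES) -> int:
    """Convert a reported resolve rate (in percent) into a number of resolved issues."""
    return round(rate_percent / 100 * n)


kat_dev = resolved_count(62.4)    # KAT-Dev-32B
kat_coder = resolved_count(73.4)  # KAT-Coder
print(kat_dev, kat_coder, kat_coder - kat_dev)
```

That works out to roughly 312 versus 367 resolved issues, a gap of about 55 tasks between the open-weight model and its API-only sibling.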

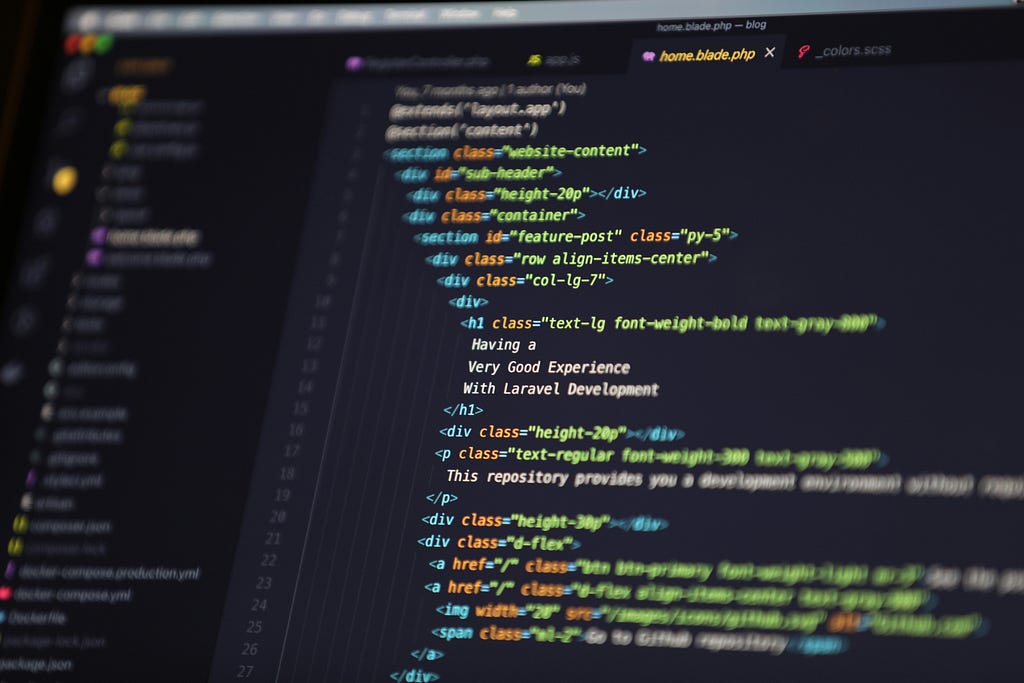

Inference: Hugging Face quick start

The Hugging Face model page provides a ready-made loader; below is a minimal chat-style scaffold for code tasks. Make sure you have enough VRAM (multi-GPU is recommended), use bfloat16 or float16 as your hardware supports, and consider quantization for single-GPU setups.

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "Kwaipilot/KAT-Dev"  # 32B variant hosted on Hugging Face

tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16 if torch.cuda.is_available() else torch.float32,
    device_map="auto",  # shards across available GPUs (requires `accelerate`)
)

# Simple instruction template for a bug-fix task
system = "You are a senior software engineer. Provide minimal diffs and reasoning."
user = """Repository: mylib
File: src/utils/math.py
Test failure: test_divide_zero
Task: Fix divide(a, b) to raise ZeroDivisionError when b == 0.
Provide a unified diff patch only.
"""

messages = [
    {"role": "system", "content": system},
    {"role": "user", "content": user},
]


def format_chat(messages):
    # Generic plain-text fallback, used only if the tokenizer ships no chat template
    text = ""
    for m in messages:
        role = m["role"].capitalize()
        text += f"{role}: {m['content']}\n"
    text += "Assistant:"
    return text


# Prefer the chat template bundled with the tokenizer (Qwen-style); fall back otherwise
if tokenizer.chat_template:
    prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
else:
    prompt = format_chat(messages)

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

with torch.no_grad():
    output_ids = model.generate(
        **inputs,
        max_new_tokens=1024,
        do_sample=False,  # greedy, deterministic decoding for patches
        eos_token_id=tokenizer.eos_token_id,
    )

# Decode only the newly generated tokens, not the echoed prompt
text = tokenizer.decode(output_ids[0][inputs.input_ids.shape[1]:], skip_special_tokens=True)
print(text)
```
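Even when asked for "a unified diff patch only", a model may wrap the patch in a markdown fence or add a sentence of prose around it. A small post-processing step before applying the patch helps. The sketch below is a hypothetical helper (`extract_unified_diff` is our name, not part of the model card) that pulls the first unified diff out of the generated text, or returns `None` if there is none.

```python
import re
from typing import Optional

# Matches a fenced block such as a diff/patch code fence; `{3} avoids literal fences here
FENCE_RE = re.compile(r"`{3}(?:diff|patch)?[ \t]*\n(.*?)`{3}", re.DOTALL)


def extract_unified_diff(text: str) -> Optional[str]:
    """Return the first unified diff found in model output, or None."""
    # Look inside fenced blocks first; if there are none, scan the raw text
    candidates = FENCE_RE.findall(text) or [text]
    for block in candidates:
        start = block.find("--- ")
        # A unified diff needs both the "--- old" and "+++ new" file headers
        if start != -1 and "\n+++ " in block[start:]:
            return block[start:].rstrip() + "\n"
    return None
```

In the quick-start flow, the decoded output would be passed through this helper, and the result written to a file for `git apply --check` before any tests run. A `None` result is itself useful feedback to send back to the model.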

Practical tips for coding tasks

- Decoding: Use deterministic (greedy) or low-temperature decoding for diffs; enable sampling only when brainstorming multiple candidate solutions for later ranking.

- Context management: Provide failing tests, stack traces, and relevant file snippets. Keep context focused to reduce hallucinations and improve patch precision.

- Tool coupling: Integrate a runner that applies patches, runs tests, and feeds back errors; KAT-Dev-32B was optimized with agentic loops, so it benefits from iterative error feedback.
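The tool-coupling tip amounts to an edit-run-retry loop. Below is a minimal sketch with the model call and the patch runner injected as plain functions, so the loop logic can be read (and tested) on its own. `repair_loop`, `RunResult`, and the toy stand-ins are hypothetical; a real runner would apply the patch in a sandboxed checkout and run the project's tests.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RunResult:
    passed: bool
    log: str  # test-runner output, fed back to the model on failure


def repair_loop(
    generate_patch: Callable[[str], str],
    apply_and_test: Callable[[str], RunResult],
    task: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask for a patch, run the tests, feed failures back, and repeat."""
    prompt = task
    for attempt in range(1, max_attempts + 1):
        patch = generate_patch(prompt)
        result = apply_and_test(patch)
        if result.passed:
            return patch
        # Append concrete error output so the next attempt can react to it
        prompt = f"{prompt}\n\nAttempt {attempt} failed. Test output:\n{result.log}\nRevise the patch."
    return None  # budget exhausted without a passing patch


# Toy stand-ins: the "model" only produces the right patch after seeing a failure log.
def fake_generate(prompt: str) -> str:
    return "good patch" if "failed" in prompt else "bad patch"


def fake_test(patch: str) -> RunResult:
    return RunResult(passed=(patch == "good patch"), log="AssertionError: ZeroDivisionError not raised")


print(repair_loop(fake_generate, fake_test, "Fix divide(a, b)."))
```

Capping `max_attempts` keeps cost bounded; in practice the feedback log is usually truncated to the failing test's traceback to keep the context focused.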

Fine-tuning guidance

- PEFT/LoRA: For domain adaptation (e.g., framework-specific codebases), apply LoRA to the attention and MLP projections with ranks of 16–64; train on instruction-style code tasks and, where available, real edit logs.

- RFT-style data: If possible, curate “teacher” trajectories (stepwise edits with explanations and successful test runs), then train with preference- or discrepancy-based rewards before full RL to stabilize training.

- Eval harness: Recreate a mini SWE-like environment with hidden tests and strict diff application to catch regressions; report pass rates, edit distance, and revert rate.
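To see what the suggested LoRA ranks cost, note that LoRA adds two low-rank matrices, A (r × d_in) and B (d_out × r), to each adapted weight, i.e. r·(d_in + d_out) trainable parameters. The sketch below counts these for the attention and MLP projections. The layer dimensions are our assumption of the Qwen3-32B config (64 layers, hidden size 5120, 64 query heads and 8 KV heads of dimension 128, MLP size 25600) and should be checked against the checkpoint's `config.json`. The projection names are the ones Qwen-family models use in `transformers`, which is what a `peft` `LoraConfig(target_modules=...)` would reference.

```python
# Assumed Qwen3-32B dimensions (verify against the model's config.json)
HIDDEN = 5120
INTERMEDIATE = 25600
LAYERS = 64
Q_HEADS, KV_HEADS, HEAD_DIM = 64, 8, 128

# (in_features, out_features) of each linear layer LoRA is applied to, per decoder layer
PROJECTIONS = {
    "q_proj": (HIDDEN, Q_HEADS * HEAD_DIM),
    "k_proj": (HIDDEN, KV_HEADS * HEAD_DIM),
    "v_proj": (HIDDEN, KV_HEADS * HEAD_DIM),
    "o_proj": (Q_HEADS * HEAD_DIM, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_trainable_params(rank: int) -> int:
    """LoRA adds A (rank x d_in) and B (d_out x rank) to every adapted weight."""
    per_layer = sum(rank * (d_in + d_out) for d_in, d_out in PROJECTIONS.values())
    return per_layer * LAYERS


for r in (16, 32, 64):
    n = lora_trainable_params(r)
    print(f"rank {r}: {n / 1e6:.0f}M trainable params ({n / 32e9:.2%} of 32B)")
```

Under these assumptions, rank 16 trains about 134M parameters and rank 64 about 537M, well under 2% of the model. The count scales linearly with rank, so dropping the MLP projections is the bigger lever if memory is tight.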

Performance and hardware

- Throughput: Because it is dense, the 32B model generates fewer tokens/sec than MoE models of similar total size, which activate only a fraction of their weights per token; plan batch sizes and KV-cache budgets accordingly. Quantization (8-bit/4-bit) can ease VRAM pressure, with some latency trade-offs.

- Memory: Multi-GPU setups (NVLink preferred) are recommended for unquantized bf16 inference, since the weights alone take roughly 64 GB; a single 48–80 GB GPU may work with quantization and a carefully limited maximum sequence length.
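A back-of-the-envelope memory budget helps when sizing GPUs and KV cache. The sketch below estimates weight memory at different precisions from a nominal 32B parameter count, plus the KV cache, which stores one key and one value vector per layer and KV head for every cached token. The attention shape (64 layers, 8 KV heads of dimension 128) is our assumption of the Qwen3-32B config; verify it against `config.json`. The estimate ignores activations, framework overhead, and quantization metadata.

```python
GIB = 1024 ** 3
PARAMS = 32e9  # nominal parameter count

# Assumed Qwen3-32B attention shape (grouped-query attention; verify against config.json)
LAYERS, KV_HEADS, HEAD_DIM = 64, 8, 128


def weight_gib(bits_per_param: float) -> float:
    """Memory for the weights alone at a given precision."""
    return PARAMS * bits_per_param / 8 / GIB


def kv_cache_gib(tokens: int, batch: int = 1, bytes_per_value: int = 2) -> float:
    """K and V for every layer and KV head, for each cached token (bf16 by default)."""
    per_token = 2 * LAYERS * KV_HEADS * HEAD_DIM * bytes_per_value
    return per_token * tokens * batch / GIB


for name, bits in (("bf16", 16), ("int8", 8), ("4-bit", 4)):
    print(f"{name:>5}: ~{weight_gib(bits):.0f} GiB of weights")
print(f"KV cache, 32k tokens, batch 1: {kv_cache_gib(32_768):.0f} GiB")
```

With these assumptions the weights need about 60 GiB in bf16, 30 GiB in 8-bit, and 15 GiB in 4-bit, and each 32k-token sequence adds about 8 GiB of bf16 KV cache. That is why long contexts or larger batches push a single 48 GB card toward quantization.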

Roadmap and variants

- KAT-Coder: Higher-performing sibling (73.4% Verified) offered via API access; technical report and detailed training recipe indicated as forthcoming.

- Ongoing updates: The KAT-Dev repo and blog note continuing work on scaling RL and releasing more detailed evaluations; watch the model card and org site for changes.

Why KAT-Dev-32B stands out

- Methodological clarity: Mid-train → SFT → RFT → scaled agentic RL, with concrete engineering to make trajectory-heavy RL feasible.

- Open-weight accessibility: Strong Verified score while being runnable by practitioners with suitable hardware, enabling reproducible research and real-world integration.

For a production-ready agent loop, a natural follow-up is a minimal “edit-run-retry” harness with diff application, sandboxed execution, and automatic patch scoring aligned with SWE-Bench-style evaluation.
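The patch-scoring step of such a harness can follow the SWE-Bench criterion: an instance counts as resolved only if the tests that reproduce the issue (FAIL_TO_PASS) now pass and the tests that passed before (PASS_TO_PASS) still pass. The sketch below is a hypothetical scorer over a dictionary of per-test outcomes; the test IDs are made up. It treats a missing test, for example one that crashed or was never collected, as a failure.

```python
from typing import Dict, Iterable


def is_resolved(
    results: Dict[str, bool],
    fail_to_pass: Iterable[str],
    pass_to_pass: Iterable[str],
) -> bool:
    """Target tests now pass and no previously passing test regresses.

    `results` maps test IDs to pass/fail after the patch is applied;
    a test absent from `results` counts as failed.
    """
    fixed = all(results.get(t, False) for t in fail_to_pass)
    no_regressions = all(results.get(t, False) for t in pass_to_pass)
    return fixed and no_regressions


def resolve_rate(outcomes: Iterable[bool]) -> float:
    """Fraction of task instances resolved."""
    outcomes = list(outcomes)
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


run = {"test_divide_zero": True, "test_divide_basic": True, "test_multiply": False}
print(is_resolved(run, ["test_divide_zero"], ["test_divide_basic"]))  # True
print(is_resolved(run, ["test_divide_zero"], ["test_multiply"]))      # False: regression
```

Scoring on both test groups is what makes the metric strict. A patch that fixes the target test but breaks something else does not count, which matches how the Verified numbers above are computed.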


KAT-Dev-32B, Unpacked: A 32B Open Coding Model Trained with Mid-Train, RFT, and Scaled Agentic RL was originally published in Data Science in Your Pocket on Medium.